A paradigm shift in the accessibility of Artificial Intelligence (AI)-driven Computer Vision technologies means that now is the time for business leaders to consider how these technologies can deliver competitive advantage.

What used to be the domain of expensive dedicated products is fast becoming a standardised workflow, backed by heavy investment from Cloud service providers. This, together with access to cheap, powerful hardware, is reducing the cost and complexity of AI implementations and enabling organisations to efficiently deploy computer vision tools targeted at their unique challenges.

In this article, I’ll help tech-oriented business leaders identify applications for AI-driven computer vision, particularly the real-time applications made possible by the new generation of powerful, accelerated AI hardware.

Contents

- Paradigm shift—train your own AI

- What you can do with $200 of hardware

- What are potential applications?

- Follow-up questions

- Limitations

- Integrating AI with other systems

- Final thoughts

Paradigm shift—train your own AI

Instead of buying “off-the-shelf” dedicated AI products for a specific problem like scooter counting, organisations can now think more broadly about a problem they would like to solve and train their own models using Machine Learning (ML) services.

Where this once required specialised development, it can now follow well-defined processes supported by all the major Cloud service providers. Amazon Web Services (AWS), Microsoft Azure, and Google Cloud have all invested heavily in tools that make training and deploying AI models as accessible as any other Cloud service. The goal is to provide flexible, adaptable solutions, targeted at your application, at significantly reduced cost.

The ML approach to Computer Vision solutions begins with the same set of general requirements. You’ll need:

- Video or image capture to collect raw data (from a fixed or mobile camera, or even a drone)

- Local or Cloud processing to support model training

- Tooling and hardware to deploy and run the AI model, and (probably)

- Some support from a provider experienced in integrating AI solutions

What you can do with $200 of hardware

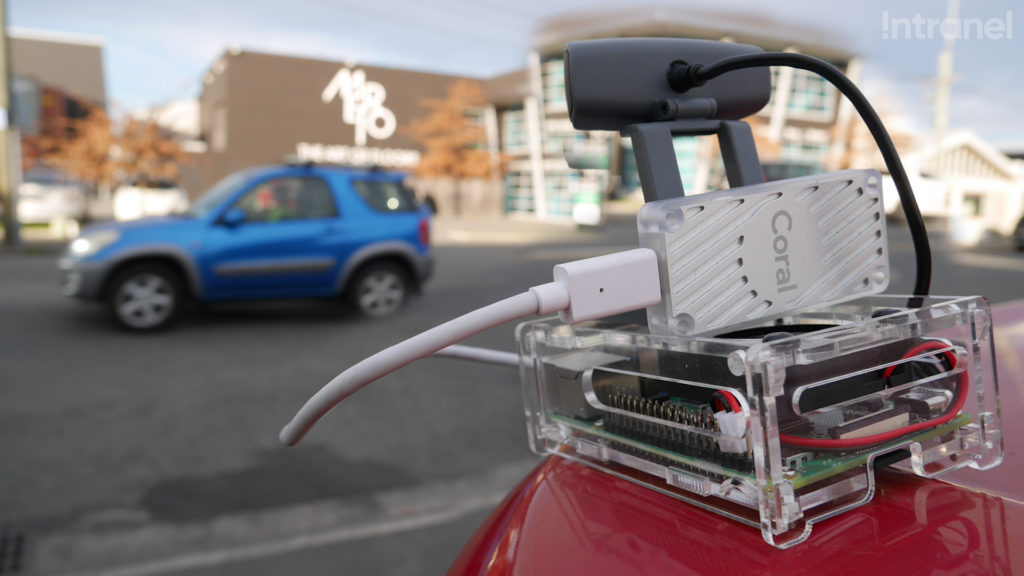

With Google’s Coral programme coming out of beta in 2019, anyone can now run powerful, hardware-accelerated AI computer vision applications on hardware that costs under $200. Smart deep-learning camera systems are also available from Amazon (AWS DeepLens) and Microsoft (Vision AI Developer Kit).

One of Intranel’s internal projects involved tracking, differentiating, and counting different brands of scooters (e.g., Lime, Flamingo) in public areas in real time, using only Coral accelerator hardware and a Raspberry Pi.


Using smart, local “edge” devices means that you don’t need high-bandwidth connections to servers or cloud infrastructure (or costly server-side processing). For instance, if an AI-enabled camera can count pedestrians using local hardware, it only needs to send small summaries, such as the number of pedestrians who passed in a given period, over an inexpensive IoT channel, rather than stream video continuously to a server.
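To make the bandwidth difference concrete, here is a minimal Python sketch of what an edge device might send instead of video. It assumes a hypothetical `CrossingEvent` record produced by the on-device detector and a hypothetical device ID of `cam-01`; the message format is purely illustrative, not a standard IoT schema.

```python
import json
from collections import Counter
from dataclasses import dataclass


@dataclass
class CrossingEvent:
    """One pedestrian crossing detected on the device (hypothetical event shape)."""
    timestamp: float  # seconds since the start of observation
    direction: str    # e.g. "in" or "out"


def summarise(events, period_start, period_end, device_id):
    """Aggregate raw on-device events into one small summary message."""
    in_period = [e for e in events if period_start <= e.timestamp < period_end]
    counts = Counter(e.direction for e in in_period)
    return {
        "device": device_id,
        "start": period_start,
        "end": period_end,
        "counts": dict(sorted(counts.items())),
    }


def encode(summary):
    """Serialise compactly; these bytes are what would travel over the IoT link."""
    return json.dumps(summary, separators=(",", ":")).encode("utf-8")


if __name__ == "__main__":
    # Simulated detections: one crossing every 7 seconds for about 5 minutes.
    events = [CrossingEvent(i * 7.0, "out" if i % 3 == 0 else "in") for i in range(40)]
    payload = encode(summarise(events, 0, 300, "cam-01"))
    print(len(payload), "bytes per 5-minute summary")
```

The summary fits in well under 100 bytes. By comparison, even a modest video stream at an assumed 1 Mbit/s amounts to about 37.5 MB over the same five minutes (1 Mbit/s × 300 s = 300 Mbit).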

Another key advantage of running ML inference locally is keeping user data private: there is no need to stream or store personally identifiable video. Collectively, this shift to powerful, low-cost field devices adds up to a step-change in the industry.

In the video below, you can see cars captured and tracked in real-time. Note that each individual vehicle is recognised as a unique instance that could be tracked across multiple video feeds.

The model can be retrained to track bicycles, pedestrians, or any other object. Further functionality, such as counting vehicles heading in each direction, becomes straightforward once objects can be reliably identified and tracked.
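As a rough illustration of why direction counting becomes easy once objects are tracked, the Python sketch below uses a deliberately simple greedy nearest-centroid tracker (not the method used in Intranel’s project or in any particular library) and counts tracks crossing a horizontal line. It assumes the detector already outputs one `(x, y)` centroid per object per frame; `max_distance` and `line_y` are illustrative parameters.

```python
import math
from itertools import count


class CentroidTracker:
    """Greedy nearest-centroid tracker: a simple illustration, not production code."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance  # max pixel jump still treated as the same object
        self.tracks = {}                  # track id -> last known (x, y)
        self._ids = count()               # source of new track ids: 0, 1, 2, ...

    def update(self, centroids):
        """Match this frame's detections to existing tracks; return {id: (x, y)}."""
        unmatched = dict(self.tracks)
        current = {}
        for c in centroids:
            best_id, best_d = None, self.max_distance
            for tid, prev in unmatched.items():
                d = math.dist(c, prev)
                if d <= best_d:
                    best_id, best_d = tid, d
            if best_id is None:
                best_id = next(self._ids)  # no close track: a new object has appeared
            else:
                del unmatched[best_id]     # each track matches at most one detection
            current[best_id] = c
        self.tracks = current              # tracks not seen this frame are dropped
        return current


def count_crossings(frames, line_y, tracker):
    """Count tracked objects crossing the line y = line_y, split by direction."""
    counts = {"down": 0, "up": 0}
    last_y = {}
    for centroids in frames:
        for tid, (_, y) in tracker.update(centroids).items():
            if tid in last_y:
                if last_y[tid] < line_y <= y:
                    counts["down"] += 1
                elif last_y[tid] >= line_y > y:
                    counts["up"] += 1
            last_y[tid] = y
    return counts


if __name__ == "__main__":
    # Two vehicles: one moving down the frame, one moving up.
    frames = [
        [(10, 80), (200, 140)],
        [(12, 95), (198, 120)],
        [(14, 110), (196, 98)],
        [(16, 125), (194, 80)],
    ]
    print(count_crossings(frames, line_y=100, tracker=CentroidTracker()))
```

Real deployments typically use more robust matching (for example, combining appearance features with motion prediction), but the counting logic on top of the tracker stays this simple.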

What are potential applications?

Imagine a pool of resources that is able to observe interactions, conduct quality control, and collect data, as well as raise alerts and provide inputs to other systems. On top of that, these resources are reliable, fast to train, and happy to work 24 hours a day for free.

These types of AI-driven computer vision technologies have been successfully deployed for several years, demonstrating that, in many environments, what a human can visually detect and react to can be automated and often improved on. In the past, these technologies were available only to companies with significant budgets. Now the playing field has levelled, and they are within reach of almost any organisation.

IMPORTANT

When evaluating potential use cases in your context, the broad question is:

Can you add value by automating the recognition of objects, characteristics, or events, and tracking them in a video while extracting key data?

This could cover a wide range of applications. Here are a few potential use cases:

- Tracking and counting people, vehicles, or other objects moving through a scene

- Grading product quality, or detecting flaws

- Detecting scenarios that increase the probability of an event or accident occurring

- Estimating physical characteristics of objects, such as size, weight, or velocity (e.g., fibre measurement)

- Detecting specific events, and pushing a notification to other systems

As well as new applications, it’s worth considering options to add intelligence to existing products or processes. For instance, if you supply monitored security camera systems, you might add intelligence that highlights the feeds where people or vehicles are present.

More specific examples include:

- Detection of traffic accidents at intersections

- Recognising altercations or suspicious activity (e.g., shoplifting) from security video feeds

- Monitoring distractions for drivers or machinery operators

- Face or voice recognition for identity verification

- Licence plate recognition

- Crop or stock monitoring from drone feeds in agricultural applications

- Species recognition, including weed, disease, or pest detection

Follow-up questions

If you see potential opportunities, evaluate your current infrastructure to find out whether you can leverage it to kickstart your AI project.

Here are three questions to answer:

(1) Do you have existing video data or live streams from existing infrastructure?

If you are already set up with raw data feeds, you can leverage these and potentially don’t need to install new hardware.

(2) Can you use an existing pre-trained model?

If you want to detect or track objects like people or cars, chances are you can access an existing model. If you want to look for blemishes on a specific type of fruit, you may need to train your own AI model.

(3) Do you have (or can you capture) plenty of examples of the object you want to track or the event you want to detect?

If you’re training a new AI model, you will need plenty of recorded, labelled examples of what you want to detect. Recognition techniques in ML are not limited to video: similar approaches can be applied to audio or other data to recognise almost any kind of recurring pattern.

Limitations

Standard pre-trained models are very good at recognising particular objects or object characteristics in video or image data, but sometimes additional image processing techniques are required. For instance, a standard object detector may be good at locating licence plates in an image, but reading the actual plate number calls for a different technique, such as optical character recognition (OCR).

In these cases, it may be necessary to overlay different techniques to extract the information that is most valuable, including leveraging conventional image processing techniques.
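The licence plate example amounts to chaining two stages: one model finds the region of interest, and a second technique extracts information from that region. The Python sketch below shows only that structure. The `detect` and `read` callables are hypothetical stand-ins for, say, a trained plate detector and an OCR engine, and images are simplified to nested lists of grayscale pixels.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Image = List[List[int]]  # grayscale pixels, row-major (simplified stand-in for a real frame)


@dataclass
class Box:
    """Axis-aligned bounding box returned by the detection stage."""
    x: int
    y: int
    w: int
    h: int


def crop(image: Image, box: Box) -> Image:
    """Cut the detected region out of the full frame."""
    return [row[box.x:box.x + box.w] for row in image[box.y:box.y + box.h]]


def read_plates(image: Image,
                detect: Callable[[Image], Sequence[Box]],
                read: Callable[[Image], str]) -> List[str]:
    """Stage 1 locates plates in the frame; stage 2 reads the text inside each crop."""
    return [read(crop(image, box)) for box in detect(image)]


if __name__ == "__main__":
    frame = [[r * 10 + c for c in range(6)] for r in range(4)]
    fake_detect = lambda img: [Box(1, 1, 3, 2)]  # hypothetical detector output
    fake_read = lambda region: "ABC123"          # hypothetical OCR output
    print(read_plates(frame, fake_detect, fake_read))
```

Keeping the stages behind simple interfaces like this makes it easy to swap in a conventional image processing step (or a different model) for either stage without touching the rest of the pipeline.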

At Intranel, we are working on generalising beyond object recognition to tracking individual instances of moving objects and to “event” recognition: detecting defined events in video. These might include an abnormal event in a production environment, a collision between vehicles, or specific interactions between people.

Get in touch with our team to kickstart your AI project.

Integrating AI with other systems

AI-powered vision systems generate data, so you will also need a plan for using it effectively. How will it integrate with your existing business processes and systems? Some computer vision AI systems generate large volumes of data that call for other AI techniques, e.g., predictive analytics, to extract maximum value.

Final thoughts

AI tooling for Computer Vision applications is going mainstream, with the cost and complexity of the hardware and applications around the technology shrinking rapidly. With modest budgets, organisations can now train and deploy dedicated computer vision models exactly matched to their applications.

Previously they were limited to off-the-shelf products or major internal product development. Applications are broad and not always immediately obvious, which means business leaders need to carefully consider potential use cases within their sector.

If you are looking to deploy AI solutions, our team will be able to help with both the core models and the (equally vital) integration work for your environment, including:

- Overcoming challenges around data management and maturity

- Extracting “hard” data from Computer Vision AI models, e.g., people or vehicle counting or tagging specific events

- Building UX, dashboards, and visualisations

- Implementing integrations between Computer Vision, IoT, and Cloud systems

- Integrating AI with client-facing Web and Mobile services

If you’d like to learn more about how AI can transform your business and help you stay ahead of the curve, get in touch with us and let’s talk about your challenges.